TRENT ROBERTSON

Webflow developer, animator, & product designer


Research & Discovery

I conduct user research—interviews, surveys, usability tests, and analytics reviews—to uncover user needs and pain points. I analyze both qualitative and quantitative data, then synthesize the findings into personas, journey maps, and problem statements that frame design opportunities.

Strategy & Planning

I translate insights into design strategies that align with business goals. I define experience objectives and success metrics, and I partner with product managers to scope features and prioritize work effectively.

Experience Design

I create wireframes, interaction flows, and prototypes to bring ideas to life. I also structure clear information architecture and ensure accessible, responsive design across all platforms and devices.

Collaboration & Execution

I work closely with engineers to ensure a smooth handoff from design to development. I partner with visual designers, researchers, and product managers to refine solutions, and I facilitate design workshops and critiques to strengthen collaboration.

Testing & Iteration

I lead usability testing, A/B experiments, and concept validation. I iterate on designs based on data and feedback, always balancing quick fixes with a longer-term vision.

Leadership & Mentorship

I mentor junior designers, provide constructive feedback, and advocate for design thinking within the organization. I also present design work and influence leadership by connecting design strategy to business outcomes with clear storytelling.

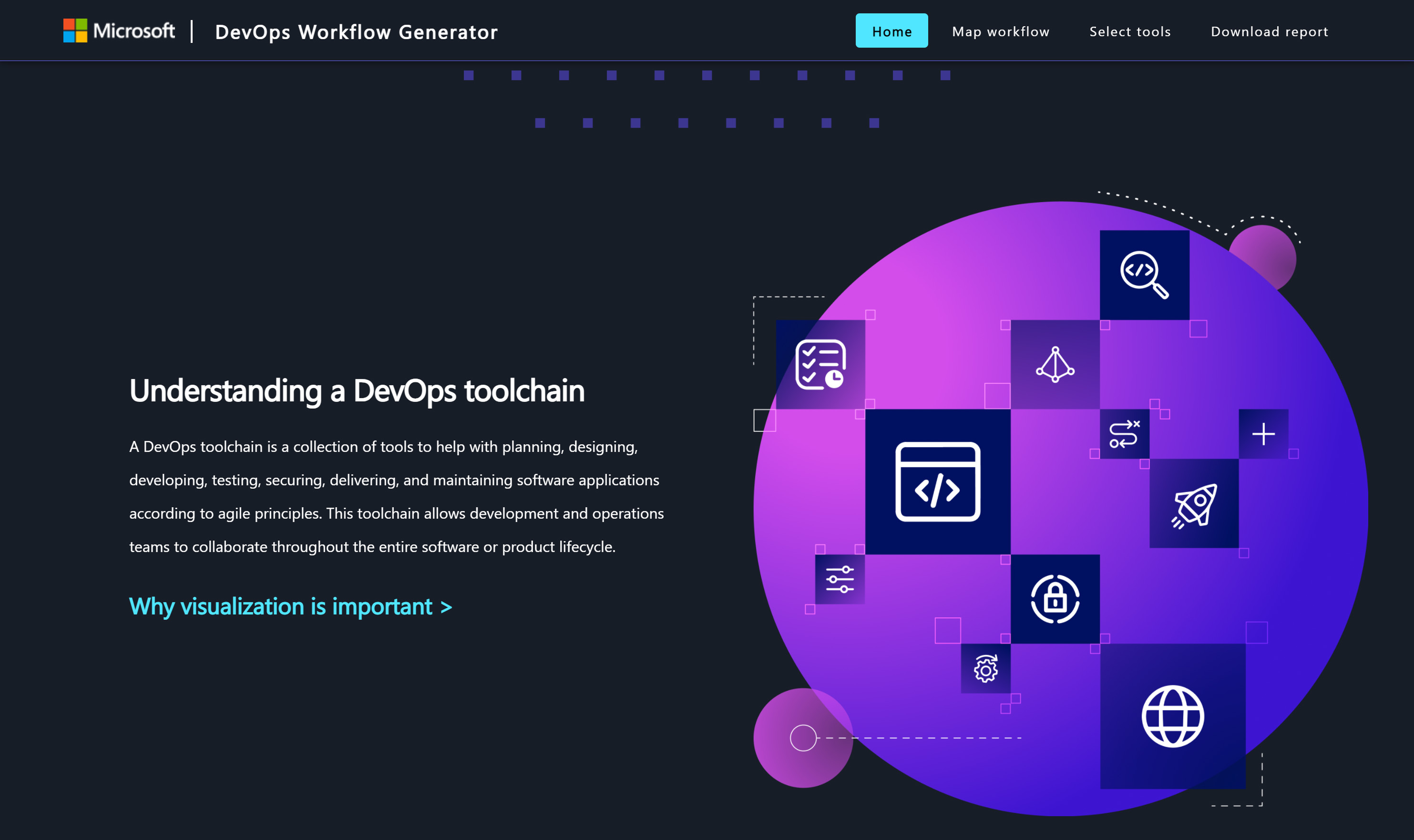

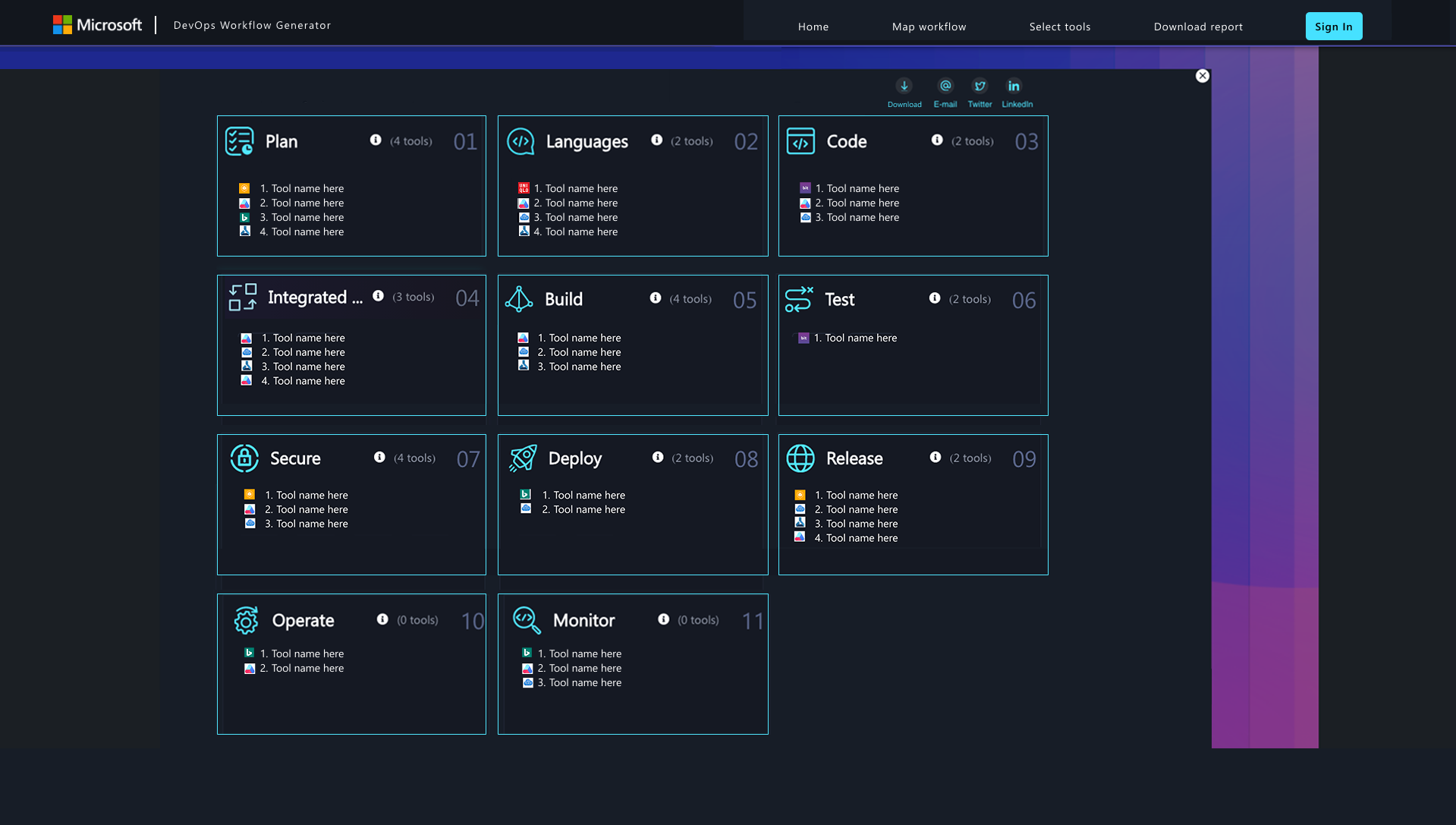

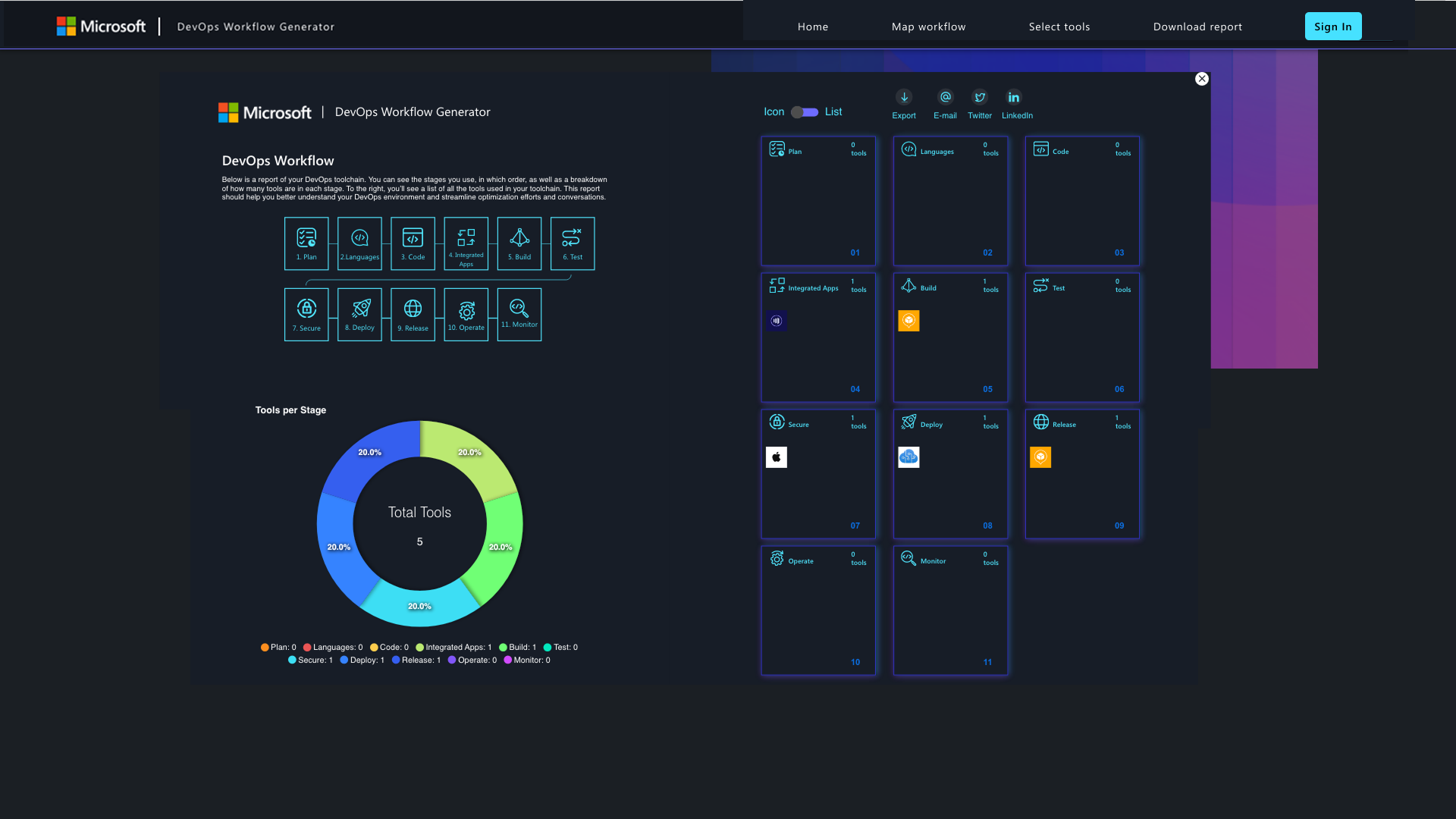

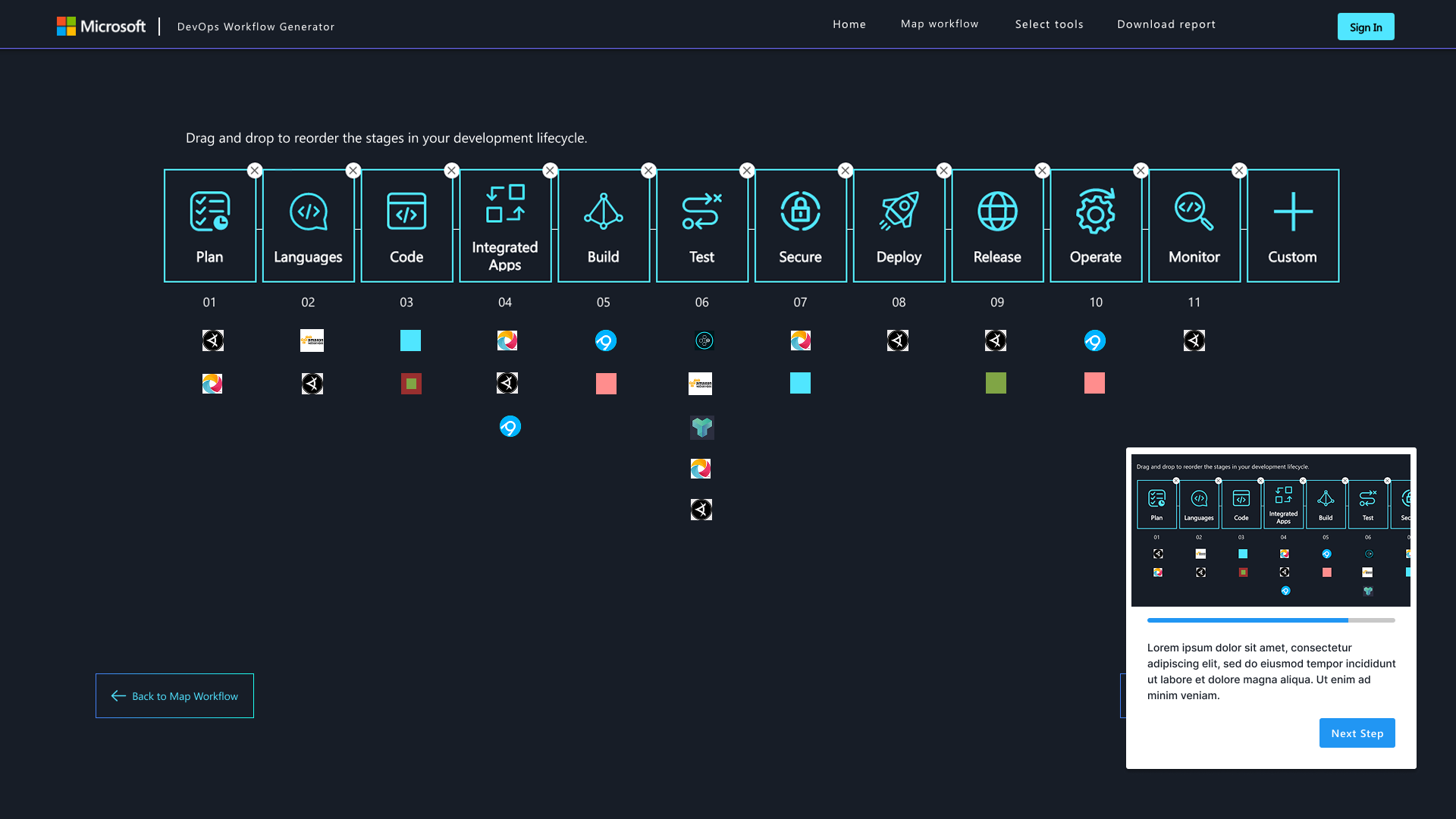

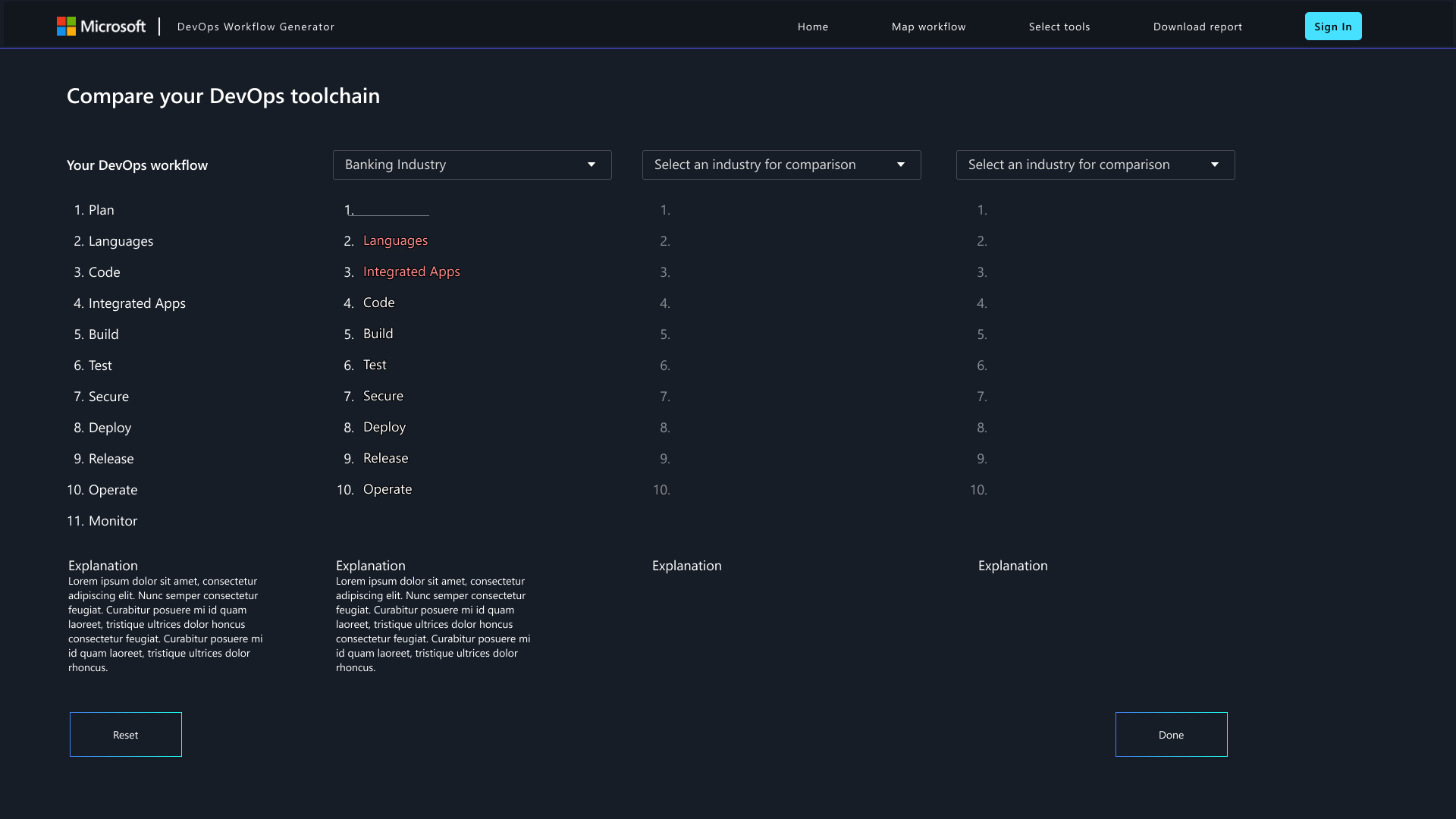

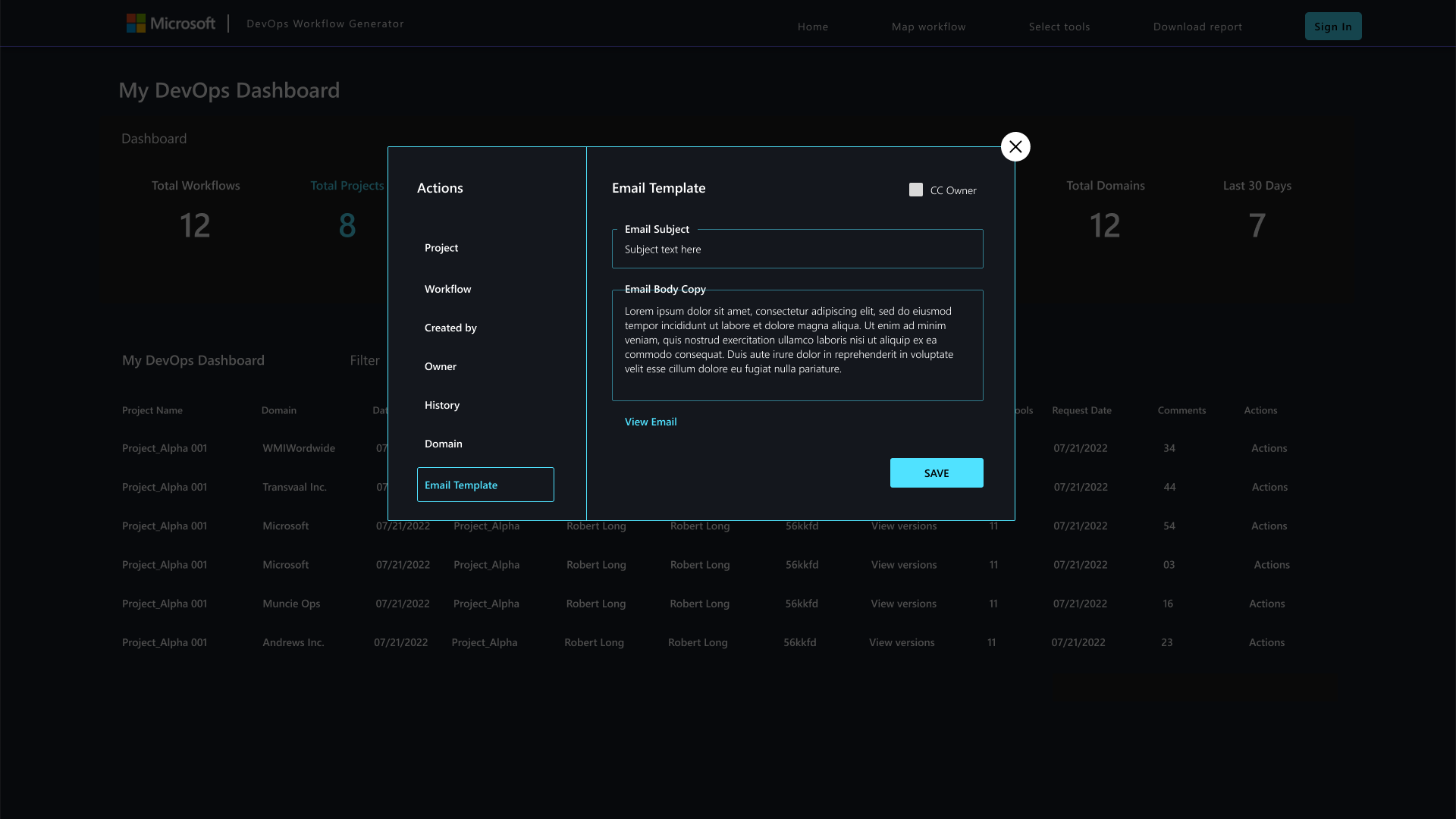

Microsoft’s DevOps Toolchain Generator was not ready for wide circulation because its interface was confusing, inconsistent, and created unnecessary cognitive load for enterprise managers making high-stakes decisions. As Lead UX/UI Designer, I was asked by Microsoft to reimagine the experience through user research, workflow audits, and a modular design system that emphasized clarity, trust, and scalability. The redesign reduced onboarding time, cut abandonment rates, and boosted adoption among DevOps managers, while positioning Microsoft to easily add new integrations in the future.

If you are a DevOps manager at a large enterprise, every tool you choose shapes not just workflows but long-term trust with your teams and leadership. Poor experiences don’t just waste time; they erode confidence, damage credibility, and weaken loyalty to the platform. Microsoft’s DevOps Toolchain Generator promised to simplify decision-making and build confidence, but its dense UI, unclear logic, and vague labels left users frustrated and hesitant to return.

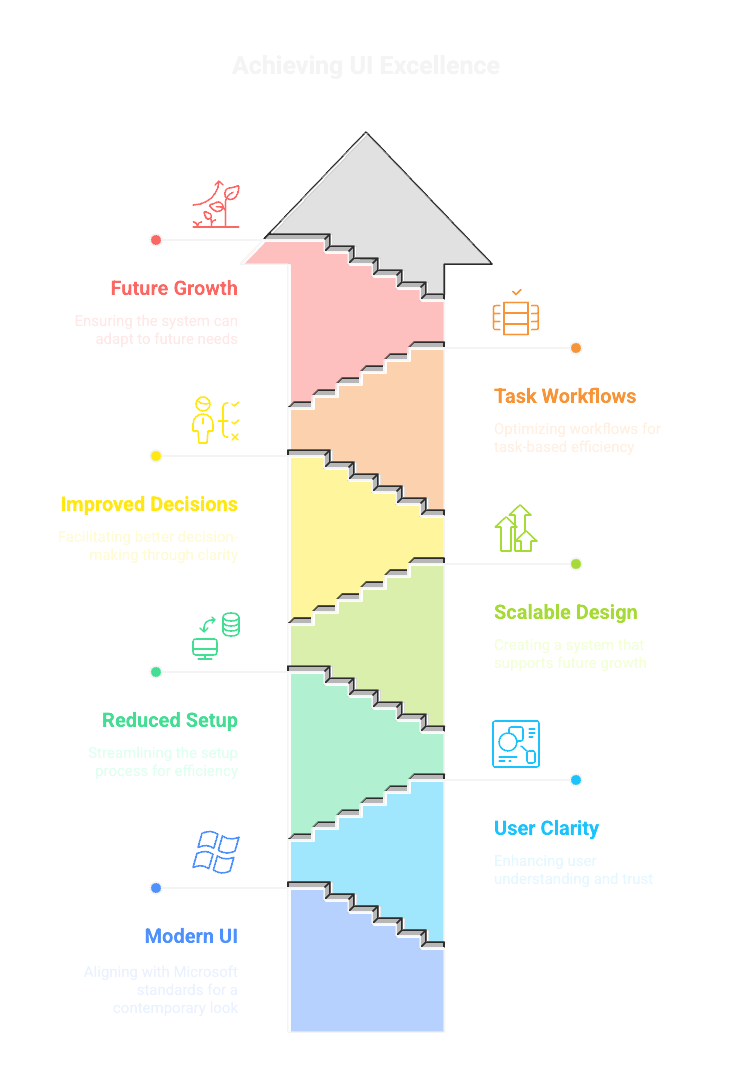

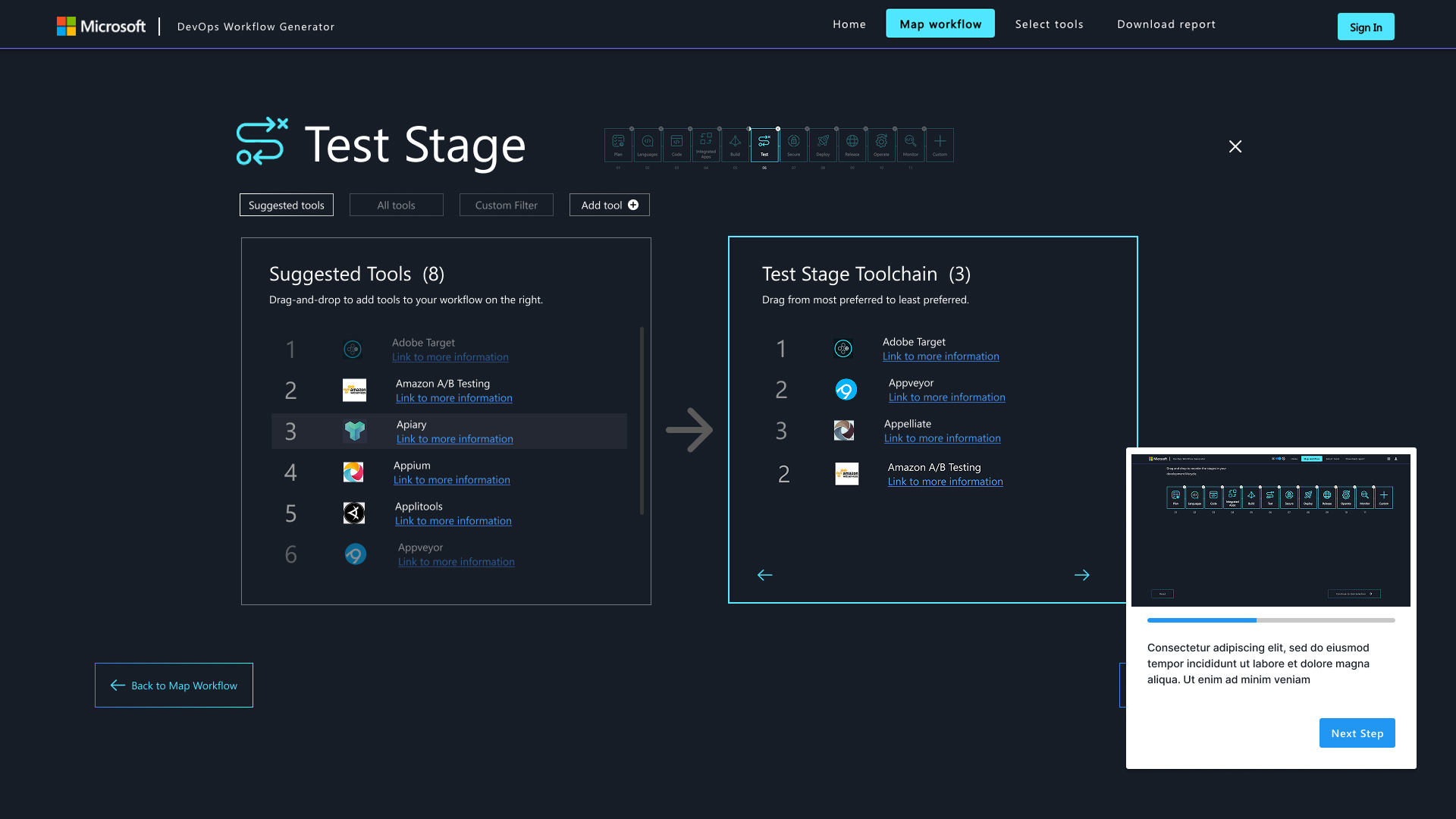

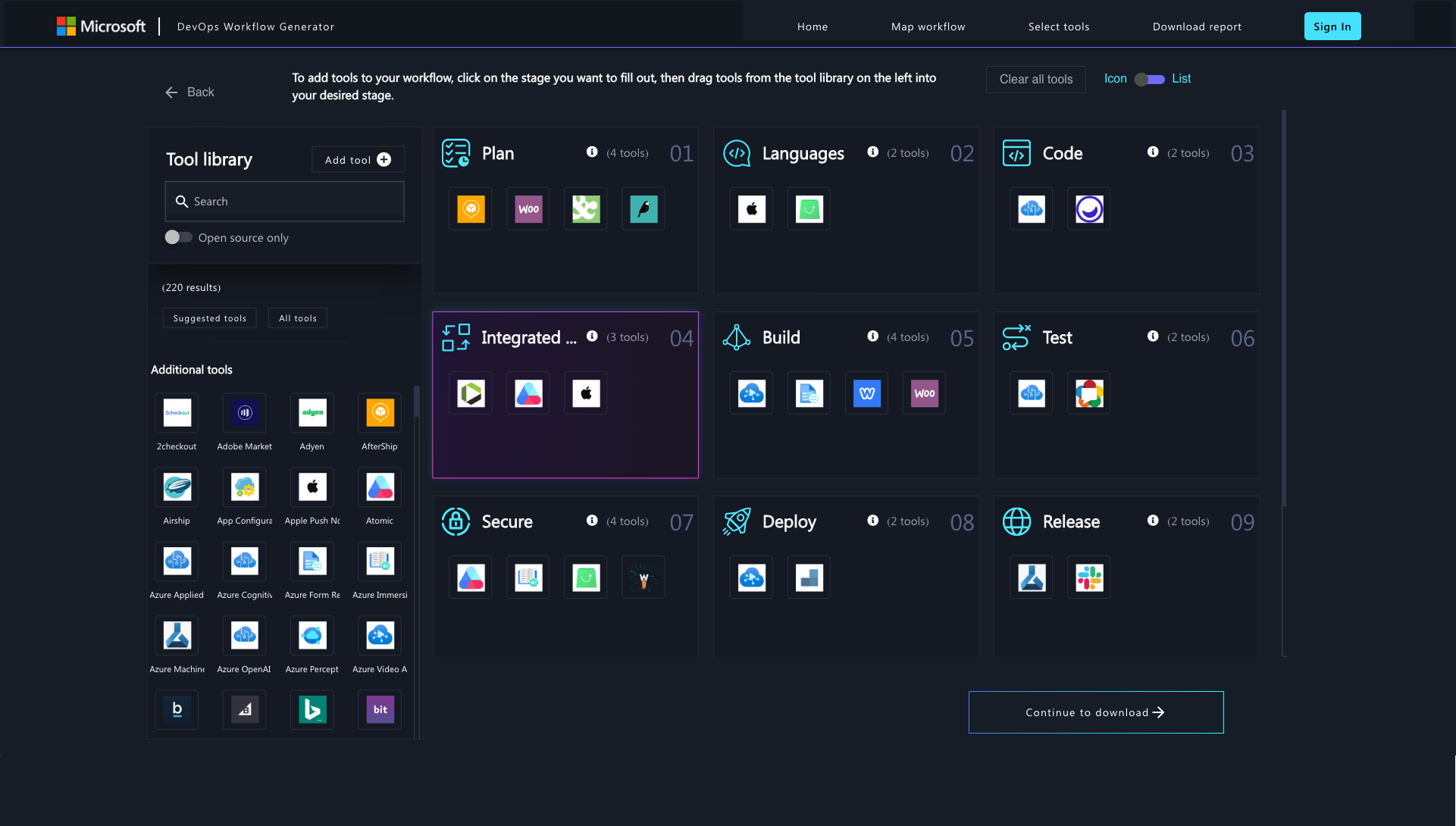

I led a complete redesign focused on rebuilding trust and loyalty. Through stakeholder interviews and workflow audits, I uncovered the friction points undermining user confidence. I then rebuilt the end-to-end experience: clear language that inspires confidence, streamlined navigation that reduces friction, and a modular, scalable design system consistent with Microsoft’s brand standards.

The outcome was more than a polished tool—it was a platform that encouraged repeat use, reduced churn, and strengthened customer loyalty. Managers could now make confident, strategic decisions quickly, knowing the tool was reliable, intuitive, and designed with their success in mind.

As Lead UX/UI Product Designer & Strategist, I owned the work from discovery through high-fidelity design, aligning user needs with business goals while ensuring the design system could scale for future tool adoption.

The Microsoft DevOps Toolchain Generator is part of Microsoft’s enterprise offering for DevOps and engineering teams. The goal was to streamline how enterprise DevOps managers design and automate toolchains and workflows, including decisions about which integrations, pipelines, and tools to use. But the web app’s design had problems.

The experience was cumbersome: an unclear value proposition, an overloaded UI, vague labels, confusing logic, and inconsistent patterns led to anxiety, low confidence in decisions, and delayed adoption.

The users simply were not properly understood.

This was because the user persona wasn’t just “any developer.” It was specifically the DevOps manager or engineering leader deciding whether to adopt and configure the tool. Their biggest concerns weren’t only about usability, but also about long-term confidence in the decision:

High-stakes decision: Selecting a DevOps toolchain commits a team to specific workflows, integrations, and costs. A poor decision can negatively impact the entire organization, potentially harming the enterprise's business and the manager's career and reputation.

This insight shaped our design strategy. We didn’t just simplify screens; we embedded clearer language, transparent decision logic, and contextual guidance so users felt their choices were well-supported by Microsoft. That reduced anxiety and increased adoption.


Stakeholder Interview Questions

• Tell me about your role and responsibilities related to DevOps toolchains.

• How long have you been in this role, and what’s your team size?

• What’s your level of decision-making authority when it comes to selecting DevOps tools?

• Which other roles or stakeholders do you collaborate with when making toolchain decisions?

• What does success look like for you in managing DevOps pipelines?

• Walk me through how you currently create or configure a toolchain.

• What steps take the most time in your current process?

• Which tools or integrations are must-haves in your workflow?

• How do you usually learn about or evaluate new tools?

• What bottlenecks or frustrations occur most often during setup or configuration?

• Have you ever abandoned a setup before completion? Why?

• What parts of your current toolchain generator feel confusing or unclear?

• Where do you encounter errors or mismatches most often?

• Do you feel confident that your toolchain is configured correctly after setup? Why or why not?

• What makes you hesitate or second-guess choices when selecting tools or integrations?

• How important is it for you to feel that Microsoft (or the vendor) supports your choices?

• What would increase your confidence that the tool is reliable and future-proof?

• What kind of documentation, validation, or support do you look for before finalizing decisions?

• Do you feel the system explains the impact of each decision well enough?

• What would make you trust AI/automation recommendations more in this process?

• What would encourage you to use this tool more frequently?

• Are there specific features or integrations that would make the tool indispensable for you?

• How do you measure whether a toolchain is successful after implementation?

• What’s one thing that would make onboarding to this product easier for you or your team?

• If you could wave a magic wand, what’s the one improvement that would save you the most time or frustration?
